REFERENCES

- [1] A. A. Laghari, A. K. Jumani, and R. A. Laghari, (2021) “Review and state of art of fog computing” Archives of Computational Methods in Engineering 28(5): 3631–3643. DOI: doi.org/10.1007/s11831-020-09517-y.

- [2] A. A. Laghari, K. Wu, R. A. Laghari, M. Ali, and A. A. Khan, (2021) “A review and state of art of Internet of Things (IoT)” Archives of Computational Methods in Engineering: 1–19. DOI: doi.org/10.1007/s11831-021-09622-6.

- [3] M. B. Janjua, A. E. Duranay, and H. Arslan, (2020) “Role of wireless communication in healthcare system to cater disaster situations under 6G vision” Frontiers in Communications and Networks 1: 610879. DOI: doi.org/10.3389/frcmn.2020.610879.

- [4] T. Li, A. K. Sahu, M. Zaheer, M. Sanjabi, A. Talwalkar, and V. Smith, (2020) “Federated optimization in heterogeneous networks” Proceedings of Machine Learning and Systems 2: 429–450.

- [5] X. Zhang and X. Luo, (2020) “Exploiting defenses against GAN-based feature inference attacks in federated learning” arXiv preprint arXiv:2004.12571. DOI: doi.org/10.48550/arXiv.2004.12571.

- [6] L. Lyu, H. Yu, and Q. Yang, (2020) “Threats to federated learning: A survey” arXiv preprint arXiv:2003.02133. DOI: doi.org/10.48550/arXiv.2003.02133.

- [7] J. Huang, R. Talbi, Z. Zhao, S. Boucchenak, L. Y. Chen, and S. Roos. “An exploratory analysis on users’ contributions in federated learning”. In: 2020 Second IEEE International Conference on Trust, Privacy and Security in Intelligent Systems and Applications (TPSISA). IEEE. 2020, 20–29. DOI: doi.org/10.1109/TPSISA50397.2020.00014.

- [8] Y. Liu, X. Yuan, R. Zhao, Y. Zheng, and Y. Zheng, (2020) “RC-SSFL: Towards robust and communication efficient semi-supervised federated learning system” arXiv preprint arXiv:2012.04432. DOI: doi.org/10.48550/arXiv.2012.04432.

- [9] R. Nazir, K. Kumar, S. David, M. Ali, et al., (2021) “Survey on wireless network security” Archives of Computational Methods in Engineering: 1–20. DOI: doi.org/10.1007/s11831-021-09631-5.

- [10] Z. A. Hamed, I. M. Ahmed, and S. Y. Ameen, (2020) “Protecting Windows OS against local threats without using antivirus” relation 29(12s): 64–70.

- [11] I. M. Ahmed and M. Y. Kashmoola. “Threats on Machine Learning Technique by Data Poisoning Attack: A Survey”. In: International Conference on Advances in Cyber Security. Springer. 2021, 586–600. DOI: doi.org/10.1007/978-981-16-8059-5_36.

- [12] M. Waqas, K. Kumar, A. A. Laghari, U. Saeed, M. M. Rind, A. A. Shaikh, F. Hussain, A. Rai, and A. Q. Qazi, (2022) “Botnet attack detection in Internet of Things devices over cloud environment via machine learning” Concurrency and Computation: Practice and Experience 34(4): e6662. DOI: doi.org/10.1002/cpe.6662.

- [13] M. Russinovich, E. Ashton, C. Avanessians, M. Castro, A. Chamayou, S. Clebsch, M. Costa, C. Fournet, M. Kerner, S. Krishna, et al., (2019) “CCF: A framework for building confidential verifiable replicated services” Technical report, Microsoft Research and Microsoft Azure.

- [14] S. V. A. Amanuel and S. Y. A. Ameen, (2021) “Device-to-device communication for 5G security: a review” Journal of Information Technology and Informatics 1(1): 26–31.

- [15] E. Bagdasaryan, A. Veit, Y. Hua, D. Estrin, and V. Shmatikov. “How to backdoor federated learning”. In: International Conference on Artificial Intelligence and Statistics. PMLR. 2020, 2938–2948.

- [16] Y. Zhao, J. Chen, J. Zhang, D. Wu, M. Blumenstein, and S. Yu, (2022) “Detecting and mitigating poisoning attacks in federated learning using generative adversarial networks” Concurrency and Computation: Practice and Experience 34(7): e5906. DOI: doi.org/10.1002/cpe.5906.

- [17] M. Goldblum, D. Tsipras, C. Xie, X. Chen, A. Schwarzschild, D. Song, A. Madry, B. Li, and T. Goldstein, (2022) “Dataset security for machine learning: Data poisoning, backdoor attacks, and defenses” IEEE Transactions on Pattern Analysis and Machine Intelligence. DOI: doi.org/10.1109/TPAMI.2022.3162397.

- [18] Y. Jiang, Y. Li, Y. Zhou, and X. Zheng. “Sybil Attacks and Defense on Differential Privacy based Federated Learning”. In: 2021 IEEE 20th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom). IEEE. 2021, 355–362. DOI: doi.org/10.1109/TrustCom53373.2021.00062.

- [19] X. Chen, Y. Duan, R. Houthooft, J. Schulman, I. Sutskever, and P. Abbeel, (2016) “InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets” Advances in Neural Information Processing Systems 29.

- [20] G. Sun, Y. Cong, J. Dong, Q. Wang, L. Lyu, and J. Liu, (2021) “Data poisoning attacks on federated machine learning” IEEE Internet of Things Journal. DOI: doi.org/10.1109/JIOT.2021.3128646.

- [21] Y. Wang, P. Mianjy, and R. Arora. “Robust learning for data poisoning attacks”. In: International Conference on Machine Learning. PMLR. 2021, 10859–10869.

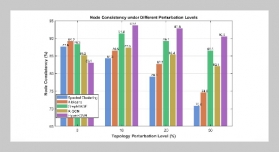

- [22] V. Tolpegin, S. Truex, M. E. Gursoy, and L. Liu. “Data poisoning attacks against federated learning systems”. In: European Symposium on Research in Computer Security. Springer. 2020, 480–501. DOI: doi.org/10.1007/978-3-030-58951-6_24.

- [23] M. Fang, X. Cao, J. Jia, and N. Gong. “Local model poisoning attacks to Byzantine-Robust federated learning”. In: 29th USENIX Security Symposium (USENIX Security 20). 2020, 1605–1622.

- [24] C. Fung, C. J. Yoon, and I. Beschastnikh, (2018) “Mitigating sybils in federated learning poisoning” arXiv preprint arXiv:1808.04866. DOI: doi.org/10.48550/arXiv.1808.04866.

- [25] A. N. Bhagoji, S. Chakraborty, P. Mittal, and S. Calo. “Model poisoning attacks in federated learning”. In: Proc. Workshop Secur. Mach. Learn. (SecML) 32nd Conf. Neural Inf. Process. Syst. (NeurIPS). 2018, 1–23.

- [26] Z. Chen, P. Tian, W. Liao, and W. Yu, (2021) “Towards multi-party targeted model poisoning attacks against federated learning systems” High-Confidence Computing 1(1): 100002. DOI: doi.org/10.1016/j.hcc.2021.100002.

- [27] C. Zhou, Y. Sun, D. Wang, and Q. Gao, (2022) “Fed-Fi: Federated Learning Malicious Model Detection Method Based on Feature Importance” Security and Communication Networks 2022. DOI: doi.org/10.1155/2022/7268347.

- [28] D. Cao, S. Chang, Z. Lin, G. Liu, and D. Sun. “Understanding distributed poisoning attack in federated learning”. In: 2019 IEEE 25th International Conference on Parallel and Distributed Systems (ICPADS). IEEE. 2019, 233–239.

- [29] H. Hosseini, H. Park, S. Yun, C. Louizos, J. Soriaga, and M. Welling. “Federated Learning of User Verification Models Without Sharing Embeddings”. In: International Conference on Machine Learning. PMLR. 2021, 4328–4336.

- [30] X. Li, Z. Qu, S. Zhao, B. Tang, Z. Lu, and Y. Liu, (2021) “LoMar: A local defense against poisoning attack on federated learning” IEEE Transactions on Dependable and Secure Computing. DOI: doi.org/10.1109/TDSC.2021.3135422.

- [31] A. Panda, S. Mahloujifar, A. N. Bhagoji, S. Chakraborty, and P. Mittal. “SparseFed: Mitigating Model Poisoning Attacks in Federated Learning with Sparsification”. In: International Conference on Artificial Intelligence and Statistics. PMLR. 2022, 7587–7624.

- [32] X. Cao and N. Z. Gong. “MPAF: Model Poisoning Attacks to Federated Learning based on Fake Clients”. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022, 3396–3404. DOI: doi.org/10.48550/arXiv.2203.08669.

- [33] A. Shamis, P. Pietzuch, M. Castro, E. Ashton, A. Chamayou, S. Clebsch, A. Delignat-Lavaud, C. Fournet, M. Kerner, J. Maffre, et al., (2021) “PAC: Practical accountability for CCF” arXiv preprint arXiv:2105.13116. DOI: doi.org/10.48550/arXiv.2105.13116.

- [34] A. Moghimi, G. Irazoqui, and T. Eisenbarth. “CacheZoom: How SGX amplifies the power of cache attacks”. In: International Conference on Cryptographic Hardware and Embedded Systems. Springer. 2017, 69–90.

- [35] H. Fereidooni, S. Marchal, M. Miettinen, A. Mirhoseini, H. Möllering, T. D. Nguyen, P. Rieger, A.-R. Sadeghi, T. Schneider, H. Yalame, et al. “SAFELearn: secure aggregation for private federated learning”. In: 2021 IEEE Security and Privacy Workshops (SPW). IEEE. 2021, 56–62. DOI: doi.org/10.1109/SPW53761.2021.00017.

- [36] W. Huang, T. Li, D. Wang, S. Du, J. Zhang, and T. Huang, (2022) “Fairness and accuracy in horizontal federated learning” Information Sciences 589: 170–185. DOI: doi.org/10.1016/j.ins.2021.12.102.

- [37] B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. Agüera y Arcas. “Communication-efficient learning of deep networks from decentralized data”. In: Artificial Intelligence and Statistics. PMLR. 2017, 1273–1282.

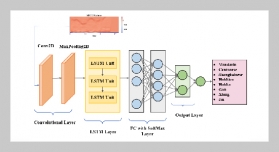

- [38] S. M. S. A. Abdullah, S. Y. A. Ameen, M. A. Sadeeq, and S. Zeebaree, (2021) “Multimodal emotion recognition using deep learning” Journal of Applied Science and Technology Trends 2(02): 52–58. DOI: doi.org/10.38094/jastt20291.