Jiazheng Sun, Weiguang Li

School of Mechanical and Automotive Engineering, South China University of Technology, Guangzhou, Guangdong, China

Received:

February 27, 2022

Copyright The Author(s). This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are cited.

Accepted:

April 6, 2023

Publication Date:

July 5, 2023

DOI:

https://doi.org/10.6180/jase.202402_27(2).0011

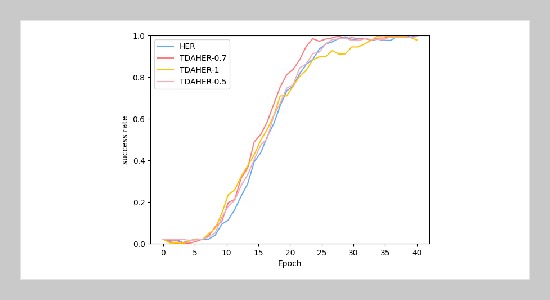

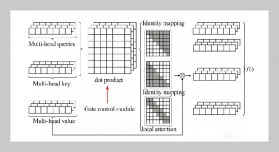

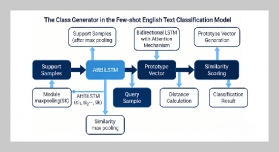

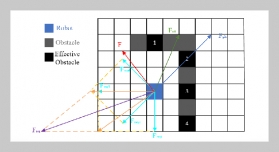

Robot motion control is a high-dimensional, nonlinear control problem that is often difficult to handle with traditional dynamics-based path planning. Reinforcement learning is an effective approach to robot motion control, but it requires a large number of trial-and-error interactions and suffers from sparse rewards, both of which limit its efficiency in practice. The Hindsight Experience Replay (HER) algorithm is a reinforcement learning algorithm that addresses the sparse-reward problem by constructing virtual goals. However, HER still trains slowly in the early stage of training, and its sample efficiency leaves room for improvement. Augmenting existing data to improve training efficiency is widely used in supervised learning but has seen less use in reinforcement learning. In this paper, we propose the Hindsight Experience Replay with Transformed Data Augmentation (TDAHER) algorithm, which combines HER with a transformed data augmentation method for reinforcement learning samples. To address the limited accuracy of augmented samples in the later stage of training, we introduce a decaying participation factor. Comparisons on four simulated robot control tasks show that the algorithm effectively improves the training efficiency of reinforcement learning.

Keywords:

Reinforcement learning; Machine learning; Motion control; Data augmentation
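
As a rough illustration of the hindsight relabeling that the abstract attributes to HER, the sketch below relabels a failed episode with the goal it actually reached, so that at least one transition receives a non-negative reward. It is a minimal sketch of the standard "final" relabeling strategy only, not the TDAHER method proposed in the paper. The function names, the dictionary layout of a transition, the 2-D positions, and the tolerance `tol` are all assumptions made for this example.

```python
import math

def sparse_reward(achieved_goal, goal, tol=0.05):
    """Sparse reward: 0.0 if the goal is reached within `tol`, else -1.0."""
    return 0.0 if math.dist(achieved_goal, goal) <= tol else -1.0

def her_relabel_final(episode, tol=0.05):
    """Relabel every transition with the goal achieved at the end of the episode.

    `episode` is a list of dicts with the (hypothetical) keys
    'obs', 'action', 'achieved_goal', and 'goal'.
    """
    virtual_goal = episode[-1]["achieved_goal"]  # the hindsight (virtual) goal
    relabeled = []
    for step in episode:
        relabeled.append({
            "obs": step["obs"],
            "action": step["action"],
            "achieved_goal": step["achieved_goal"],
            "goal": virtual_goal,
            # Recompute the reward as if the virtual goal had been the target.
            "reward": sparse_reward(step["achieved_goal"], virtual_goal, tol),
        })
    return relabeled

# Toy episode: the agent never gets close to the real goal at (1.0, 1.0).
goal = (1.0, 1.0)
positions = [(0.1, 0.0), (0.2, 0.1), (0.3, 0.3)]
episode = [
    {"obs": p, "action": (0.0, 0.0), "achieved_goal": p, "goal": goal}
    for p in positions
]

original_rewards = [sparse_reward(s["achieved_goal"], s["goal"]) for s in episode]
relabeled_rewards = [t["reward"] for t in her_relabel_final(episode)]

print(original_rewards)   # [-1.0, -1.0, -1.0]
print(relabeled_rewards)  # [-1.0, -1.0, 0.0]
```

In a typical HER setup, both the original and the relabeled transitions are stored in the replay buffer, so the off-policy learner sees some successful outcomes even when the real goal is never reached.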