- [1] V. Chandola, A. Banerjee, and V. Kumar, (2009) “Anomaly detection: A survey" ACM Computing Surveys 41(3): DOI: 10.1145/1541880.1541882.

- [2] Y. Wang and Y. Li, (2021) “Outlier detection based on weighted neighbourhood information network for mixed-valued datasets" Information Sciences 564: 396–415. DOI: 10.1016/j.ins.2021.02.045.

- [3] H.-P. Kriegel, P. Kröger, and A. Zimek, (2010) “Outlier detection techniques" Tutorial at KDD 10: 1–76.

- [4] H. Wang, M. J. Bah, and M. Hammad, (2019) “Progress in Outlier Detection Techniques: A Survey" IEEE Access 7: 107964–108000. DOI: 10.1109/ACCESS.2019.2932769.

- [5] C. C. Aggarwal. “Supervised outlier detection”. In: Outlier Analysis. Springer, 2017, 219–248.

- [6] B. Diallo, J. Hu, T. Li, G. A. Khan, X. Liang, and Y. Zhao, (2021) “Deep embedding clustering based on contractive autoencoder" Neurocomputing 433: 96–107. DOI: 10.1016/j.neucom.2020.12.094.

- [7] J. Wang, W. Yuan, and D. Cheng, (2015) “Hybrid genetic-particle swarm algorithm: An efficient method for fast optimization of atomic clusters" Computational and Theoretical Chemistry 1059: 12–17. DOI: 10.1016/j.comptc.2015.02.003.

- [8] M. N. Ab Wahab, S. Nefti-Meziani, and A. Atyabi, (2015) “A comprehensive review of swarm optimization algorithms" PLoS ONE 10(5): DOI: 10.1371/journal.pone.0122827.

- [9] G. A. Khan, J. Hu, T. Li, B. Diallo, and Y. Zhao, (2022) “Multi-view low rank sparse representation method for three-way clustering" International Journal of Machine Learning and Cybernetics 13(1): 233–253. DOI: 10.1007/s13042-021-01394-6.

- [10] T. Nakane, N. Bold, H. Sun, X. Lu, T. Akashi, and C. Zhang, (2020) “Application of evolutionary and swarm optimization in computer vision: a literature survey" IPSJ Transactions on Computer Vision and Applications 12(1): DOI: 10.1186/s41074-020-00065-9.

- [11] J. Kennedy and R. Eberhart. “Particle swarm optimization”. In: Proceedings of ICNN’95 - International Conference on Neural Networks. 4. IEEE. 1995, 1942–1948.

- [12] R. O. Ogundokun, J. B. Awotunde, P. Sadiku, E. A. Adeniyi, M. Abiodun, and O. I. Dauda. “An Enhanced Intrusion Detection System using Particle Swarm Optimization Feature Extraction Technique”. In: Procedia Computer Science 193. 2021, 504–512. DOI: 10.1016/j.procs.2021.10.052.

- [13] S. Rana, S. Jasola, and R. Kumar, (2011) “A review on particle swarm optimization algorithms and their applications to data clustering" Artificial Intelligence Review 35(3): 211–222.

- [14] C. Guan, K. K. F. Yuen, and F. Coenen, (2019) “Particle swarm Optimized Density-based Clustering and Classification: Supervised and unsupervised learning approaches" Swarm and Evolutionary Computation 44: 876–896. DOI: 10.1016/j.swevo.2018.09.008.

- [15] Y. Liu, Z. Li, H. Xiong, X. Gao, and J. Wu. “Understanding of internal clustering validation measures”. In: 2010 IEEE International Conference on Data Mining. 2010, 911–916. DOI: 10.1109/ICDM.2010.35.

- [16] M.-D. Yang, Y.-F. Yang, T.-C. Su, and K.-S. Huang, (2014) “An efficient fitness function in genetic algorithm classifier for landuse recognition on satellite images" The Scientific World Journal 2014: DOI: 10.1155/2014/264512.

- [17] D. L. Davies and D. W. Bouldin, (1979) “A Cluster Separation Measure" IEEE Transactions on Pattern Analysis and Machine Intelligence PAMI-1(2): 224–227. DOI: 10.1109/TPAMI.1979.4766909.

- [18] M.-D. Yang, Y.-F. Yang, T.-C. Su, and K.-S. Huang, (2014) “An efficient fitness function in genetic algorithm classifier for landuse recognition on satellite images" The Scientific World Journal 2014: DOI: 10.1155/2014/264512.

- [19] A. Asma and B. Sadok. “PSO-based dynamic distributed algorithm for automatic task clustering in a robotic swarm”. In: Procedia Computer Science 159. 2019, 1103–1112. DOI: 10.1016/j.procs.2019.09.279.

- [20] L. Dey and S. Chakraborty, (2014) “Canonical PSO based K-means clustering approach for real datasets" International Scholarly Research Notices 2014.

- [21] K. Babaei, Z. Chen, and T. Maul, (2019) “Detecting point outliers using prune-based outlier factor (PLOF)" arXiv preprint arXiv:1911.01654.

- [22] A. G. Gad, (2022) “Particle Swarm Optimization Algorithm and Its Applications: A Systematic Review" Archives of Computational Methods in Engineering 29(5): 2531–2561. DOI: 10.1007/s11831-021-09694-4.

- [23] S. Alam, G. Dobbie, Y. S. Koh, P. Riddle, and S. Ur Rehman, (2014) “Research on particle swarm optimization based clustering: A systematic review of literature and techniques" Swarm and Evolutionary Computation 17: 1–13. DOI: 10.1016/j.swevo.2014.02.001.

- [24] A. A. A. Esmin, R. A. Coelho, and S. Matwin, (2015) “A review on particle swarm optimization algorithm and its variants to clustering high-dimensional data" Artificial Intelligence Review 44(1): 23–45. DOI: 10.1007/s10462-013-9400-4.

- [25] E. O. Merza and N. J. Al-Anber. “A Suggested method for detecting outliers based on a particle swarm optimization algorithm”. In: Journal of Physics: Conference Series 1897(1). 2021. DOI: 10.1088/1742-6596/1897/1/012021.

- [26] S. M. H. Bamakan, B. Amiri, M. Mirzabagheri, and Y. Shi. “A New intrusion detection approach using PSO based multiple criteria linear programming”. In: Procedia Computer Science 55. 2015, 231–237. DOI: 10.1016/j.procs.2015.07.040.

- [27] K.-W. Wang and S.-J. Qin. “A hybrid approach for anomaly detection using K-means and PSO”. In: 2nd International Conference on Electronics, Network and Computer Engineering (ICENCE 2016). Atlantis Press.2016, 821–826.

- [28] A. Wahid and A. C. S. Rao, (2019) “A Distance-Based Outlier Detection Using Particle Swarm Optimization Technique" Lecture Notes in Networks and Systems 40: 633–643. DOI: 10.1007/978-981-13-0586-3_62.

- [29] L. Guo, (2020) “Research on anomaly detection in massive multimedia data transmission network based on improved PSO algorithm" IEEE Access 8: 95368–95377. DOI: 10.1109/ACCESS.2020.2994578.

- [30] A. Karami and M. Guerrero-Zapata, (2015) “A fuzzy anomaly detection system based on hybrid PSO-Kmeans algorithm in content-centric networks" Neurocomputing 149(PC): 1253–1269. DOI: 10.1016/j.neucom.2014.08.070.

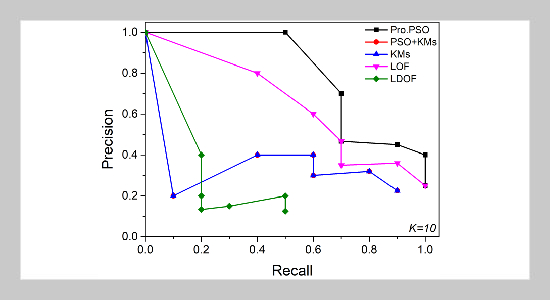

- [31] R. M. Alguliyev, R. M. Aliguliyev, and F. J. Abdullayeva, (2019) “PSO+K-means algorithm for anomaly detection in big data" Statistics, Optimization and Information Computing 7(2): 348–359. DOI: 10.19139/soic.v7i2.623.

- [32] M. Lotfi Shahreza, D. Moazzami, B. Moshiri, and M. Delavar, (2011) “Anomaly detection using a self-organizing map and particle swarm optimization" Scientia Iranica 18(6): 1460–1468. DOI: 10.1016/j.scient.2011.08.025.

- [33] A. Mekhmoukh and K. Mokrani, (2015) “Improved Fuzzy C-Means based Particle Swarm Optimization (PSO) initialization and outlier rejection with level set methods for MR brain image segmentation" Computer Methods and Programs in Biomedicine 122(2): 266–281. DOI: 10.1016/j.cmpb.2015.08.001.

- [34] A. A. d. M. Meneses, M. D. Machado, and R. Schirru, (2009) “Particle Swarm Optimization applied to the nuclear reload problem of a Pressurized Water Reactor" Progress in Nuclear Energy 51(2): 319–326. DOI: 10.1016/j.pnucene.2008.07.002.

- [35] Y. Zhang, S. Wang, and G. Ji, (2015) “A Comprehensive Survey on Particle Swarm Optimization Algorithm and Its Applications" Mathematical Problems in Engineering 2015: DOI: 10.1155/2015/931256.

- [36] X. Tao, X. Li, W. Chen, T. Liang, Y. Li, J. Guo, and L. Qi, (2021) “Self-Adaptive two roles hybrid learning strategies-based particle swarm optimization" Information Sciences 578: 457–481. DOI: 10.1016/j.ins.2021.07.008.

- [37] Z.-G. Liu, X.-H. Ji, Y. Yang, and H.-T. Cheng, (2021) “Multi-technique diversity-based particle-swarm optimization" Information Sciences 577: 298–323. DOI: 10.1016/j.ins.2021.07.006.

- [38] D. Van Der Merwe and A. Engelbrecht. “Data clustering using particle swarm optimization”. In: The 2003 Congress on Evolutionary Computation (CEC ’03). 1. 2003, 215–220. DOI: 10.1109/CEC.2003.1299577.

- [39] B. Xue, M. Zhang, and W. N. Browne, (2013) “Particle swarm optimization for feature selection in classification: A multi-objective approach" IEEE Transactions on Cybernetics 43(6): 1656–1671. DOI: 10.1109/TSMCB.2012.2227469.

- [40] R. Jamous, H. ALRahhal, and M. El-Darieby, (2021) “A new ANN-particle swarm optimization with center of gravity (ANN-PSOCoG) prediction model for the stock market under the effect of COVID-19" Scientific Programming 2021.

- [41] S. Sarkar, A. Roy, and B. S. Purkayastha, (2013) “Application of particle swarm optimization in data clustering: A survey" International Journal of Computer Applications 65(25).

- [42] L. Zajmi, F. Y. Ahmed, and A. A. Jaharadak, (2018) “Concepts, Methods, and Performances of Particle Swarm Optimization, Backpropagation, and Neural Networks" Applied Computational Intelligence and Soft Computing 2018: DOI: 10.1155/2018/9547212.

- [43] J. C. Bansal, P. K. Singh, and N. R. Pal. Evolutionary and swarm intelligence algorithms. 779. Springer, 2019.

- [44] M. Kalami Heris. Evolutionary Data Clustering in MATLAB. https://yarpiz.com/64/ypml101-evolutionary-clustering. accessed June 2021.

- [45] S. Zhang, X. Li, M. Zong, X. Zhu, and R. Wang, (2018) “Efficient kNN classification with different numbers of nearest neighbors" IEEE Transactions on Neural Networks and Learning Systems 29(5): 1774–1785. DOI: 10.1109/TNNLS.2017.2673241.

- [46] R. Pamula, J. K. Deka, and S. Nandi. “An outlier detection method based on clustering”. In: 2011 International Conference on Emerging Applications of Information Technology (EAIT). 2011, 253–256. DOI: 10.1109/EAIT.2011.25.

- [47] D. L. Davies and D. W. Bouldin, (1979) “A Cluster Separation Measure" IEEE Transactions on Pattern Analysis and Machine Intelligence PAMI-1(2): 224–227. DOI: 10.1109/TPAMI.1979.4766909.

- [48] P. Cortez and A. de Jesus Raimundo Morais. A data mining approach to predict forest fires using meteorological data. http://www3.dsi.uminho.pt/pcortez/fires.pdf. 2007.

- [49] D. Dua and C. Graff. Ionosphere Dataset, UCI Machine Learning Repository, University of California, Irvine, School of Information and Computer Sciences. http://archive.ics.uci.edu/ml. 2017.

- [50] S. Rayana and L. Akoglu, (2016) “Less is more: Building selective anomaly ensembles" ACM Transactions on Knowledge Discovery from Data 10(4): DOI: 10.1145/2890508.

- [51] N. Fachada, M. A. Figueiredo, V. V. Lopes, R. C. Martins, and A. C. Rosa, (2014) “Spectrometric differentiation of yeast strains using minimum volume increase and minimum direction change clustering criteria" Pattern Recognition Letters 45(1): 55–61. DOI: 10.1016/j.patrec.2014.03.008.

- [52] J. Kools. 6 functions for generating artificial datasets. https://www.mathworks.com/matlabcentral/fileexchange/41459-6-functions-for-generating-artificial-datasets. accessed June 2021.

- [53] G. K. Patel, V. K. Dabhi, and H. B. Prajapati, (2017) “Clustering Using a Combination of Particle Swarm Optimization and K-means" Journal of Intelligent Systems 26(3): 457–469. DOI: 10.1515/jisys-2015-0099.

- [54] T. Kanungo, D. M. Mount, N. S. Netanyahu, C. D. Piatko, R. Silverman, and A. Y. Wu, (2002) “An efficient k-means clustering algorithm: Analysis and implementation" IEEE Transactions on Pattern Analysis and Machine Intelligence 24(7): 881–892. DOI: 10.1109/TPAMI.2002.1017616.

- [55] M. M. Breunig, H.-P. Kriegel, R. T. Ng, and J. Sander, (2000) “LOF: Identifying density-based local outliers" SIGMOD Record (ACM Special Interest Group on Management of Data) 29(2): 93–104. DOI: 10.1145/335191.335388.

- [56] K. Zhang, M. Hutter, and H. Jin, (2009) “A new local distance-based outlier detection approach for scattered real-world data" Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) 5476 LNAI: 813–822. DOI: 10.1007/978-3-642-01307-2_84.

- [57] T. Kanungo, D. M. Mount, N. S. Netanyahu, C. D. Piatko, R. Silverman, and A. Y. Wu, (2002) “An efficient k-means clustering algorithm: Analysis and implementation" IEEE Transactions on Pattern Analysis and Machine Intelligence 24(7): 881–892. DOI: 10.1109/TPAMI.2002.1017616.

- [58] A. Asuncion and D. Newman. Forest Fire Dataset, UCI Machine Learning Repository, University of California, Irvine, School of Information and Computer Sciences. http://archive.ics.uci.edu/ml. 2007.

- [59] W. H. Wolberg, W. N. Street, and O. L. Mangasarian. Breast cancer Wisconsin (diagnostic) data set, UCI Machine Learning Repository, University of California, Irvine, School of Information and Computer Science. http://archive.ics.uci.edu/ml. 1992.

- [60] K. Nakai and M. Kanehisa, (1991) “Expert system for predicting protein localization sites in gram-negative bacteria" Proteins: Structure, Function, and Bioinformatics 11(2): 95–110.

- [61] K. Nakai and M. Kanehisa, (1992) “A knowledge base for predicting protein localization sites in eukaryotic cells" Genomics 14(4): 897–911. DOI: 10.1016/S0888-7543(05)80111-9.

- [62] C. C. Aggarwal and S. Sathe, (2015) “Theoretical foundations and algorithms for outlier ensembles" ACM SIGKDD Explorations Newsletter 17(1): 24–47.

- [63] J. Wu and J. Wu, (2012) “Cluster analysis and K-means clustering: an introduction" Advances in K-Means clustering: A data mining thinking: 1–16.

- [64] G. Gan, C. Ma, and J. Wu. Data clustering: theory, algorithms, and applications. SIAM, 2020.