- [1] V. François-Lavet, P. Henderson, R. Islam, M. G. Bellemare, and J. Pineau, (2018) “An introduction to deep reinforcement learning” Foundations and Trends in Machine Learning 11(3-4): 219–354. DOI: 10.1561/2200000071.

- [2] L. Busoniu, R. Babuska, B. De Schutter, and D. Ernst. Reinforcement learning and dynamic programming using function approximators. CRC Press, 2017.

- [3] J. Schrittwieser, I. Antonoglou, T. Hubert, K. Simonyan, L. Sifre, S. Schmitt, A. Guez, E. Lockhart, D. Hassabis, T. Graepel, T. Lillicrap, and D. Silver, (2020) “Mastering Atari, Go, chess and shogi by planning with a learned model” Nature 588(7839): 604–609. DOI: 10.1038/s41586-020-03051-4.

- [4] D. Silver, J. Schrittwieser, K. Simonyan, I. Antonoglou, A. Huang, A. Guez, T. Hubert, L. Baker, M. Lai, A. Bolton, Y. Chen, T. Lillicrap, F. Hui, L. Sifre, G. Van Den Driessche, T. Graepel, and D. Hassabis, (2017) “Mastering the game of Go without human knowledge” Nature 550(7676): 354–359. DOI: 10.1038/nature24270.

- [5] D. Silver, T. Hubert, J. Schrittwieser, I. Antonoglou, M. Lai, A. Guez, M. Lanctot, L. Sifre, D. Kumaran, T. Graepel, T. Lillicrap, K. Simonyan, and D. Hassabis, (2018) “A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play” Science 362(6419): 1140–1144. DOI: 10.1126/science.aar6404.

- [6] C. Berner, G. Brockman, B. Chan, V. Cheung, P. Dębiak, C. Dennison, D. Farhi, Q. Fischer, S. Hashme, C. Hesse, et al., (2019) “Dota 2 with large scale deep reinforcement learning” arXiv preprint arXiv:1912.06680.

- [7] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, S. Petersen, C. Beattie, A. Sadik, I. Antonoglou, H. King, D. Kumaran, D. Wierstra, S. Legg, and D. Hassabis, (2015) “Human-level control through deep reinforcement learning” Nature 518(7540): 529–533. DOI: 10.1038/nature14236.

- [8] O. Vinyals, I. Babuschkin, W. M. Czarnecki, M. Mathieu, A. Dudzik, J. Chung, D. H. Choi, R. Powell, T. Ewalds, P. Georgiev, J. Oh, D. Horgan, M. Kroiss, I. Danihelka, A. Huang, L. Sifre, T. Cai, J. P. Agapiou, M. Jaderberg, A. S. Vezhnevets, R. Leblond, T. Pohlen, V. Dalibard, D. Budden, Y. Sulsky, J. Molloy, T. L. Paine, C. Gulcehre, Z. Wang, T. Pfaff, Y. Wu, R. Ring, D. Yogatama, D. Wünsch, K. McKinney, O. Smith, T. Schaul, T. Lillicrap, K. Kavukcuoglu, D. Hassabis, C. Apps, and D. Silver, (2019) “Grandmaster level in StarCraft II using multi-agent reinforcement learning” Nature 575(7782): 350–354. DOI: 10.1038/s41586-019-1724-z.

- [9] S. Levine, P. Pastor, A. Krizhevsky, J. Ibarz, and D. Quillen, (2018) “Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection” International Journal of Robotics Research 37(4-5): 421–436. DOI: 10.1177/0278364917710318.

- [10] O. M. Andrychowicz, B. Baker, M. Chociej, R. Józefowicz, B. McGrew, J. Pachocki, A. Petron, M. Plappert, G. Powell, A. Ray, J. Schneider, S. Sidor, J. Tobin, P. Welinder, L. Weng, and W. Zaremba, (2020) “Learning dexterous in-hand manipulation” International Journal of Robotics Research 39(1): 3–20. DOI: 10.1177/0278364919887447.

- [11] S. Gu, E. Holly, T. Lillicrap, and S. Levine. “Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates”. In: 2017, 3389–3396. DOI: 10.1109/ICRA.2017.7989385.

- [12] M. Riedmiller, R. Hafner, T. Lampe, M. Neunert, J. Degrave, T. Van De Wiele, V. Mnih, N. Heess, and T. Springenberg. “Learning by playing - Solving sparse reward tasks from scratch”. In: 10. 2018, 6910–6919.

- [13] J. Xie, Z. Shao, Y. Li, Y. Guan, and J. Tan, (2019) “Deep Reinforcement Learning with Optimized Reward Functions for Robotic Trajectory Planning” IEEE Access 7: 105669–105679. DOI: 10.1109/ACCESS.2019.2932257.

- [14] M. Plappert, M. Andrychowicz, A. Ray, B. McGrew, B. Baker, G. Powell, J. Schneider, J. Tobin, M. Chociej, P. Welinder, et al., (2018) “Multi-goal reinforcement learning: Challenging robotics environments and request for research” arXiv preprint arXiv:1802.09464.

- [15] J. Tarbouriech, E. Garcelon, M. Valko, M. Pirotta, and A. Lazaric. “No-regret exploration in goal-oriented reinforcement learning”. In: PartF168147-13. 2020, 9370–9379.

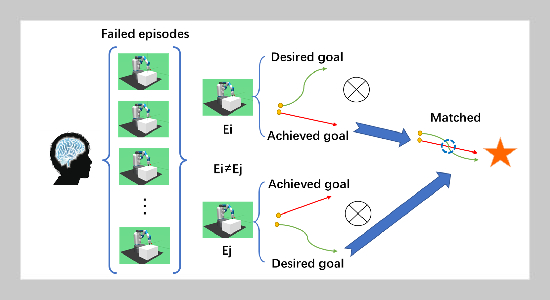

- [16] M. Andrychowicz, F. Wolski, A. Ray, J. Schneider, R. Fong, P. Welinder, B. McGrew, J. Tobin, P. Abbeel, and W. Zaremba. “Hindsight experience replay”. In: 2017-December. 2017, 5049–5059.

- [17] R. Zhao and V. Tresp. “Energy-based hindsight experience prioritization”. In: Conference on Robot Learning. PMLR. 2018, 113–122.

- [18] B. Manela and A. Biess, (2021) “Bias-reduced hindsight experience replay with virtual goal prioritization” Neurocomputing 451: 305–315. DOI: 10.1016/j.neucom.2021.02.090.

- [19] S. Lanka and T. Wu, (2018) “Archer: Aggressive rewards to counter bias in hindsight experience replay” arXiv preprint arXiv:1809.02070.

- [20] C. Bai, L. Wang, Y. Wang, Z. Wang, R. Zhao, C. Bai, and P. Liu, (2023) “Addressing Hindsight Bias in Multi-goal Reinforcement Learning” IEEE Transactions on Cybernetics 53(1): 392–405. DOI: 10.1109/TCYB.2021.3107202.

- [21] M. Fang, T. Zhou, Y. Du, L. Han, and Z. Zhang. “Curriculum-guided hindsight experience replay”. In: 32. 2019.

- [22] M. L. Puterman. Markov decision processes: discrete stochastic dynamic programming. John Wiley & Sons, 2014.

- [23] Y. Wen, J. Si, A. Brandt, X. Gao, and H. H. Huang, (2020) “Online Reinforcement Learning Control for the Personalization of a Robotic Knee Prosthesis” IEEE Transactions on Cybernetics 50(6): 2346–2356. DOI: 10.1109/TCYB.2019.2890974.

- [24] S. B. Niku. Introduction to robotics: analysis, control, applications. John Wiley & Sons, 2020.

- [25] X. Wang, L. Ke, Z. Qiao, and X. Chai, (2021) “Large-Scale Traffic Signal Control Using a Novel Multiagent Reinforcement Learning” IEEE Transactions on Cybernetics 51(1): 174–187. DOI: 10.1109/TCYB.2020.3015811.

- [26] Y.-C. Liu and C.-Y. Huang, (2022) “DDPG-Based Adaptive Robust Tracking Control for Aerial Manipulators With Decoupling Approach” IEEE Transactions on Cybernetics 52(8): 8258–8271. DOI: 10.1109/TCYB.2021.3049555.

- [27] M. Patacchiola and A. Cangelosi, (2022) “A Developmental Cognitive Architecture for Trust and Theory of Mind in Humanoid Robots” IEEE Transactions on Cybernetics 52(3): 1947–1959. DOI: 10.1109/TCYB.2020.3002892.

- [28] T. Schaul, D. Horgan, K. Gregor, and D. Silver. “Universal value function approximators”. In: 2. 2015, 1312–1320.

- [29] V. Pong, S. Gu, M. Dalal, and S. Levine, (2018) “Temporal difference models: Model-free deep RL for model-based control” arXiv preprint arXiv:1802.09081.

- [30] S. Zhang and R. S. Sutton, (2017) “A deeper look at experience replay” arXiv preprint arXiv:1712.01275.

- [31] V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D. Wierstra, and M. Riedmiller, (2013) “Playing Atari with deep reinforcement learning” arXiv preprint arXiv:1312.5602.

- [32] T. P. Lillicrap, J. J. Hunt, A. Pritzel, N. Heess, T. Erez, Y. Tassa, D. Silver, and D. Wierstra, (2015) “Continuous control with deep reinforcement learning” arXiv preprint arXiv:1509.02971.

- [33] M. Fang, C. Zhou, B. Shi, B. Gong, J. Xu, and T. Zhang. “DHER: Hindsight experience replay for dynamic goals”. In: 2019.

- [34] L. F. Vecchietti, M. Seo, and D. Har, (2022) “Sampling Rate Decay in Hindsight Experience Replay for Robot Control” IEEE Transactions on Cybernetics 52(3): 1515–1526. DOI: 10.1109/TCYB.2020.2990722.

- [35] P. Rauber, A. Ummadisingu, F. Mutz, and J. Schmidhuber, (2017) “Hindsight policy gradients” arXiv preprint arXiv:1711.06006.

- [36] A. Nair, V. Pong, M. Dalal, S. Bahl, S. Lin, and S. Levine. “Visual reinforcement learning with imagined goals”. In: 2018-December. 2018, 9191–9200.

- [37] D. P. Kingma and M. Welling, (2013) “Auto-encoding variational Bayes” arXiv preprint arXiv:1312.6114.

- [38] C. Bai, L. Wang, L. Han, J. Hao, A. Garg, P. Liu, and Z. Wang. “Principled exploration via optimistic bootstrapping and backward induction”. In: International Conference on Machine Learning. PMLR. 2021, 577–587.

- [39] H. Liu, A. Trott, R. Socher, and C. Xiong, (2019) “Competitive experience replay” arXiv preprint arXiv:1902.00528.

- [40] R. Zhao, X. Sun, and V. Tresp. “Maximum entropy-regularized multi-goal reinforcement learning”. In: 2019-June. 2019, 13022–13035.

- [41] C. Colas, P. Fournier, O. Sigaud, M. Chetouani, and P.-Y. Oudeyer. “CURIOUS: Intrinsically motivated modular multi-goal reinforcement learning”. In: 2019-June. 2019, 2372–2387.

- [42] Y. Ding, C. Florensa, M. Phielipp, and P. Abbeel. “Goal-conditioned imitation learning”. In: 32. 2019.

- [43] J. Yang, A. Nakhaei, D. Isele, K. Fujimura, and H. Zha, (2018) “CM3: Cooperative multi-goal multi-stage multi-agent reinforcement learning” arXiv preprint arXiv:1809.05188.

- [44] S. Nasiriany, V. H. Pong, S. Lin, and S. Levine. “Planning with goal-conditioned policies”. In: 32. 2019.

- [45] E. Todorov, T. Erez, and Y. Tassa. “MuJoCo: A physics engine for model-based control”. In: 2012, 5026–5033. DOI: 10.1109/IROS.2012.6386109.