REFERENCES

- [1] Dhyani, J., Keong, W. and Bhowmick, S., A Survey of Web Metrics, ACM Computing Surveys (2002).

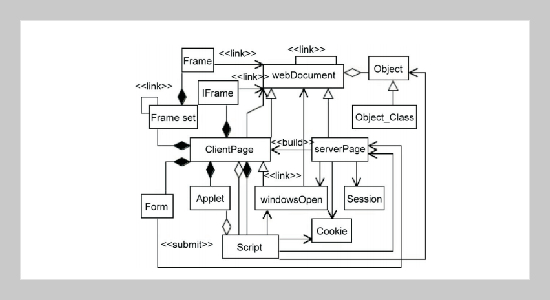

- [2] Conallen, J., Building Web Applications with UML, Addison-Wesley (2000).

- [3] Offutt, J., Wu, Y. and Du, X., Modeling and Testing of Dynamic Aspects of Web Applications, Technical Report, George Mason University, USA (2004).

- [4] Ricca, F. and Tonella, P., Building a Tool for the Analysis and Testing of Web Applications: Problems and Solutions, Tools and Algorithms for the Construction and Analysis of Systems (TACAS 2001), Genova, Italy, April (2001).

- [5] Schwabe, D., Pontes, R. and Moura, I., OOHDM-Web: An Environment for Implementation of Hypermedia Applications in the WWW, SIGWEB Newsletter, 8, June (1999).

- [6] Bellettini, C., Marchetto, A. and Trentini, A., Dynamical Extraction of Web Applications Models via Mutation Analysis, Journal of Information: An International Interdisciplinary Journal, Special Issue on Software Engineering, Vol. 8 (2005).

- [7] Briand, L., Morasca, S. and Basili, V., Defining and Validating High-Level Design Metrics, Computer Science Technical Report Series (1999).

- [8] Chidamber, S. and Kemerer, C., A Metrics Suite for Object Oriented Design, IEEE Transactions on Software Engineering (1994).

- [9] Fenton, N. and Neil, M., Software Metrics: Roadmap, International Conference on Software Engineering (ICSE 2000), Limerick, Ireland, June (2000).

- [10] Botafogo, R., Rivlin, E. and Shneiderman, B., Structural Analysis of Hypertexts: Identifying Hierarchies and Useful Metrics, ACM Transactions on Information Systems (1992).

- [11] Herder, E., Metrics for the Adaptation of Site Structure, German Workshop on Adaptivity and User Modeling in Interactive Systems (ABIS02) (2002).

- [12] Marinescu, R., Detecting Design Flaws via Metrics in Object-Oriented Systems, 39th Technology of Object-Oriented Languages and Systems (TOOLS USA 2001), Santa Barbara, CA, USA, July-August (2001).

- [13] Rosenberg, L., Hammer, T. and Shaw, J., Software Metrics and Reliability, 9th International Symposium on Software Reliability Engineering, Germany, November (1998).

- [14] Denaro, G., Morasca, S. and Pezzè, M., Deriving Models of Software Fault-Proneness, 14th International Conference on Software Engineering and Knowledge Engineering (SEKE 2002), Ischia, Italy, July (2002).

- [15] Basili, V., Briand, L. and Melo, W., A Validation of Object-Oriented Design Metrics as Quality Indicators, IEEE Transactions on Software Engineering (1996).

- [16] Fenton, N. and Neil, M., A Critique of Software Defect Prediction Models, IEEE Transactions on Software Engineering, May-June (1999).

- [17] Systä, T., Understanding the Behavior of Java Programs, 7th Working Conference on Reverse Engineering (WCRE 2000), Brisbane, Australia, November (2000).

- [18] Nageswaran, S., Test Effort Estimation Using Use Case Points, Quality Week 2001, San Francisco, California, USA, June (2001).

- [19] Shepperd, M., Schofield, C. and Kitchenham, B., Effort Estimation Using Analogy, ICSE-18, Berlin (1996).

- [20] Shan, Y., McKay, R., Lokan, C. and Essam, D., Software Project Effort Estimation Using Genetic Programming, International Conference on Communications, Circuits and Systems (ICCCAS 2002), Chengdu, China, July (2002).

- [21] Boetticher, G., Using Machine Learning to Predict Project Effort: Empirical Case Studies in Data-Starved Domains, First International Workshop on Model-Based Requirements Engineering, San Diego, USA (2001).

- [22] Durand, J. and Gaudoin, O., Software Reliability Modelling and Prediction with Hidden Markov Chains, INRIA Rhône-Alpes Technical Report, February (2003).

- [23] Di Lucca, G. A., Fasolino, A. R., Pace, F., Tramontana, P. and De Carlini, U., WARE: A Tool for the Reverse Engineering of Web Applications, 6th European Conference on Software Maintenance and Reengineering (CSMR 2002), Budapest, Hungary, March (2002).

- [24] Basili, V., Caldiera, G. and Rombach, D., GQM Paradigm, Computer Encyclopedia of Software Engineering, John Wiley & Sons (1994).

- [25] Marchetto, A. and Trentini, A., Web Applications Testability through Metrics and Analogies, Third International Conference on Information and Communication Technology (ICICT 2005), Egypt (2005).