REFERENCES

- [1] R. Jeen Retna Kumar, M. Sundaram, N. Arumugam, and V. Kavitha, (2021) “Face feature extraction for emotion recognition using statistical parameters from subband selective multilevel stationary biorthogonal wavelet transform" Soft Computing 25(7): 5483–5501. DOI: 10.1007/s00500-020-05550-y.

- [2] J. Kacur, B. Puterka, J. Pavlovicova, and M. Oravec, (2021) “On the speech properties and feature extraction methods in speech emotion recognition" Sensors 21(5): 1–27. DOI: 10.3390/s21051888.

- [3] Z. Peng, J. Dang, M. Unoki, and M. Akagi, (2021) “Multi-resolution modulation-filtered cochleagram feature for LSTM-based dimensional emotion recognition from speech" Neural Networks 140: 261–273. DOI: 10.1016/j.neunet.2021.03.027.

- [4] C. Guanghui and Z. Xiaoping, (2021) “Multi-Modal Emotion Recognition by Fusing Correlation Features of Speech-Visual" IEEE Signal Processing Letters 28: 533–537. DOI: 10.1109/LSP.2021.3055755.

- [5] W. Xiaohua, P. Muzi, P. Lijuan, H. Min, J. Chunhua, and R. Fuji, (2019) “Two-level attention with two-stage multi-task learning for facial emotion recognition" Journal of Visual Communication and Image Representation 62: 217–225. DOI: 10.1016/j.jvcir.2019.05.009.

- [6] Y. Kwak, K. Kong, W.-J. Song, B.-K. Min, and S.-E. Kim, (2020) “Multilevel Feature Fusion with 3D Convolutional Neural Network for EEG-Based Workload Estimation" IEEE Access 8: 16009–16021. DOI: 10.1109/ACCESS.2020.2966834.

- [7] H. Xu, H. Zhang, K. Han, Y. Wang, Y. Peng, and X. Li. “Learning alignment for multimodal emotion recognition from speech”. In: 2019-September. 2019, 3569–3573. DOI: 10.21437/Interspeech.2019-3247.

- [8] H. Li, W. Ding, Z. Wu, and Z. Liu, (2020) “Learning Fine-Grained Cross Modality Excitement for Speech Emotion Recognition" arXiv preprint arXiv:2010.12733.

- [9] R. Basnet, M. T. Islam, T. Howlader, S. M. Rahman, and D. Hatzinakos. “Statistical Selection of CNN-based Audiovisual Features for Instantaneous Estimation of Human Emotional States”. In: 2018-January. 2017, 50–54. DOI: 10.1109/ICTCS.2017.14.

- [10] A. Samareh, Y. Jin, Z. Wang, X. Chang, and S. Huang, (2018) “Detect depression from communication: how computer vision, signal processing, and sentiment analysis join forces" IISE Transactions on Healthcare Systems Engineering 8(3): 196–208. DOI: 10.1080/24725579.2018.1496494.

- [11] S. Poria, E. Cambria, N. Howard, G.-B. Huang, and A. Hussain, (2016) “Fusing audio, visual and textual clues for sentiment analysis from multimodal content" Neurocomputing 174: 50–59.

- [12] G. Castellano, I. Leite, A. Pereira, C. Martinho, A. Paiva, and P. W. McOwan, (2010) “Affect recognition for interactive companions: Challenges and design in real world scenarios" Journal on Multimodal User Interfaces 3(1): 89–98. DOI: 10.1007/s12193-009-0033-5.

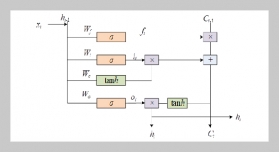

- [13] T. Chen, H. Yin, X. Yuan, Y. Gu, F. Ren, and X. Sun, (2021) “Emotion recognition based on fusion of long short term memory networks and SVMs" Digital Signal Processing: A Review Journal 117. DOI: 10.1016/j.dsp.2021.103153.

- [14] J. Zhang, X. Chen, A. Khan, Y.-k. Zhang, X. Kuang, X. Liang, M. L. Taccari, and J. Nuttall, (2021) “Daily runoff forecasting by deep recursive neural network" Journal of Hydrology 596. DOI: 10.1016/j.jhydrol.2021.126067.

- [15] M. M. Morgan, I. Bhattacharya, R. J. Radke, and J. Braasch, (2021) “Classifying the emotional speech content of participants in group meetings using convolutional long short-term memory network" Journal of the Acoustical Society of America 149(2): 885–894. DOI: 10.1121/10.0003433.

- [16] D. Huang, X. Feng, H. Zhang, Z. Yu, J. Peng, G. Zhao, and Z. Xia, (2021) “Spatio-Temporal Pain Estimation Network with Measuring Pseudo Heart Rate Gain" IEEE Transactions on Multimedia.

- [17] D. Huang, Z. Xia, J. Mwesigye, and X. Feng, (2020) “Pain-attentive network: a deep spatio-temporal attention model for pain estimation" Multimedia Tools and Applications 79(37-38): 28329–28354. DOI: 10.1007/s11042-020-09397-1.

- [18] M. Arora, S. Garg, and A. Srivani, (2021) “Face mask detection system using Mobilenetv2" International Journal of Engineering and Advanced Technology 10(4): 127–129.

- [19] C. Tan, M. Šarlija, and N. Kasabov, (2021) “NeuroSense: Short-term emotion recognition and understanding based on spiking neural network modelling of spatiotemporal EEG patterns" Neurocomputing 434: 137–148. DOI: 10.1016/j.neucom.2020.12.098.

- [20] K. Zaporojets, G. Bekoulis, J. Deleu, T. Demeester, and C. Develder, (2021) “Solving arithmetic word problems by scoring equations with recursive neural networks" Expert Systems with Applications 174. DOI: 10.1016/j.eswa.2021.114704.

- [21] A. Jisi and S. Yin, (2021) “A new feature fusion network for student behavior recognition in education" Journal of Applied Science and Engineering (Taiwan) 24(2): 133–140. DOI: 10.6180/jase.202104_24(2).0002.

- [22] H. Dong and S.-B. Tsai, (2021) “An Empirical Study on Application of Machine Learning and Neural Network in English Learning" Mathematical Problems in Engineering 2021. DOI: 10.1155/2021/8444858.

- [23] X. Ma, J. Guo, A. Sansom, M. McGuire, A. Kalaani, Q. Chen, S. Tang, Q. Yang, and S. Fu, (2021) “Spatial Pyramid Attention for Deep Convolutional Neural Networks" IEEE Transactions on Multimedia 23: 3048–3058. DOI: 10.1109/TMM.2021.3068576.

- [24] S.-H. Wang, S. Fernandes, Z. Zhu, and Y.-D. Zhang, (2021) “AVNC: Attention-based VGG-style network for COVID-19 diagnosis by CBAM" IEEE Sensors Journal. DOI: 10.1109/JSEN.2021.3062442.

- [25] D. Liu, L. Shan, L. Wang, S. Yin, H. Wang, and C. Wang, (2021) “P3OI-MELSH: Privacy Protection Target Point of Interest Recommendation Algorithm Based on Multi-Exploring Locality Sensitive Hashing" Frontiers in Neurorobotics 15. DOI: 10.3389/fnbot.2021.660304.

- [26] Y. Dong, H. Su, B. Liu, et al., (2021) “Model level fusion dimension emotion recognition method based on Transformer" Journal of Signal Processing 37(5): 885–892. DOI: 10.16798/j.issn.1003-0530.2021.05.023.