Wei Huang1,2,3, Zhen Zhang1,2,3, Wentao Li1,2 and Jiandong Tian1,2

1State Key Laboratory of Robotics, Shenyang Institute of Automation, Chinese Academy of Sciences, Shenyang 110016, P.R. China

2Institutes for Robotics and Intelligent Manufacturing, Chinese Academy of Sciences, Shenyang 110016, P.R. China

3University of Chinese Academy of Sciences, Beijing 100049, P.R. China

REFERENCES

- [1] Sugimoto, S., H. Tateda, H. Takahashi, and M. Okutomi (2004) Obstacle Detection Using Millimeter-wave Radar and Its Visualization on Image Sequence, International Conference on Pattern Recognition, Cambridge, England, Aug. 23–26, pp. 342–345. doi: 10.1109/ICPR.2004.1334537

- [2] Sun, Z. H., G. Bebis, and R. Miller (2004) On-road Vehicle Detection Using Optical Sensors: a Review, 7th IEEE International Conference on Intelligent Transportation Systems, Washington, DC, U.S.A., 585–590. doi: 10.1109/ITSC.2004.1398966

- [3] Kim, D. Y., and M. Jeon (2014) Data Fusion of Radar and Image Measurements for Multi-object Tracking via Kalman Filtering, Information Sciences 278, 641–652. doi: 10.1016/j.ins.2014.03.080

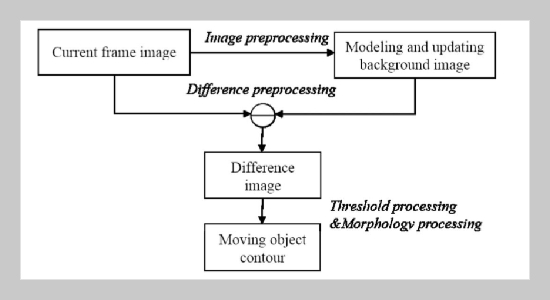

- [4] Piccardi, M. (2004) Background Subtraction Techniques: a Review, IEEE International Conference on Systems, Man and Cybernetics, The Hague, Netherlands, Oct. 10–13, pp. 3099–3104. doi: 10.1109/ICSMC.2004.1400815

- [5] Cristani, M., M. Farenzena, D. Bloisi, and V. Murino (2010) Background Subtraction for Automated Multisensor Surveillance: a Comprehensive Review, EURASIP Journal on Advances in Signal Processing 2010(43). doi: 10.1155/2010/343057

- [6] Bouwmans, T., F. El Baf, and B. Vachon (2010) Statistical Background Modeling for Foreground Detection: a Survey, Handbook of Pattern Recognition and Computer Vision 4th ed., 181–199.

- [7] Benezeth, Y., P. M. Jodoin, B. Emile, H. Laurent, and C. Rosenberger (2008) Review and Evaluation of Commonly-implemented Background Subtraction Algorithms, 19th International Conference on Pattern Recognition, Tampa, Florida, USA, Dec. 8–11. doi: 10.1109/ICPR.2008.4760998

- [8] Sobral, A., and A. Vacavant (2014) A Comprehensive Review of Background Subtraction Algorithms Evaluated with Synthetic and Real Videos, Computer Vision and Image Understanding 122, 4–21. doi: 10.1016/j.cviu.2013.12.005

- [9] Obrvan, M., J. Cesic, and I. Petrovic (2016) Appearance Based Vehicle Detection by Radar-stereo Vision Integration, Learning Robotics for Youngsters-The RoboParty Experience, 437–449.

- [10] Ji, Z. P., and D. Prokhorov (2008) Radar-vision Fusion for Object Classification, World Automation Congress 2008, Hawaii, USA, Sept. 28–Oct. 2, pp. 375–380.

- [11] Wang, T., N. N. Zheng, J. M. Xin, and Z. Ma (2011) Integrating Millimeter Wave Radar with a Monocular Vision Sensor for On-road Obstacle Detection Applications, Sensors 11(9), 8992–9008. doi: 10.3390/s110908992

- [12] Sole, A., O. Mano, G. P. Stein, H. Kumon, Y. Tamatsu, and A. Shashua (2004) Solid or Not Solid: Vision for Radar Target Validation, IEEE Intelligent Vehicles Symposium, Parma, Italy, Jun. 14–17, pp. 819–824.

- [13] Wu, S. G., S. Decker, P. Chang, T. Camus, and J. Eledath (2009) Collision Sensing by Stereo Vision and Radar Sensor Fusion, IEEE Transactions on Intelligent Transportation Systems 10(4), 606–614. doi: 10.1109/TITS.2009.2032769

- [14] Alessandretti, G., A. Broggi, and P. Cerri (2007) Vehicle and Guard Rail Detection Using Radar and Vision Data Fusion, IEEE Transactions on Intelligent Transportation Systems 8(1), 95–104. doi: 10.1109/TITS.2006.888597

- [15] Bombini, L., P. Cerri, P. Medici, and G. Alessandretti (2006) Radar-vision Fusion for Vehicle Detection, Proceedings of International Workshop on Intelligent Transportation, 65–70.

- [16] Chen, X. W. (2016) Study on Vehicle Detection Using Vision and Radar, Master's thesis, Jilin University, Jilin, China.

- [17] Zhang, Z. Y. (2000) A Flexible New Technique for Camera Calibration, IEEE Transactions on Pattern Analysis and Machine Intelligence 22(11), 1330–1334. doi: 10.1109/34.888718