REFERENCES

- [1] Jo J., Lee SJ., Park KR., Kim I-J., Kim J., “Detecting driver drowsiness using feature-level fusion and user-specific classification,” Expert Systems with Applications, Vol. 41, No. 4, pp. 1139-1152 (2014). doi: 10.1016/j.eswa.2013.07.108

- [2] Cyganek B., Gruszczyński S., “Hybrid computer vision system for drivers' eye recognition and fatigue monitoring,” Neurocomputing, Vol. 126, pp. 78-94 (2014). doi: 10.1016/j.neucom.2013.01.048

- [3] Dasgupta A., George A., Happy S., Routray A., “A vision-based system for monitoring the loss of attention in automotive drivers,” IEEE Transactions on Intelligent Transportation Systems, Vol. 14, No. 4, pp. 1825-1838 (2013). doi: 10.1109/TITS.2013.2271052

- [4] Zhao C., Lian J., Dang Q., Tong C., “Classification of driver fatigue expressions by combined curvelet features and Gabor features, and random subspace ensembles of support vector machines,” Journal of Intelligent & Fuzzy Systems, Vol. 26, No. 1, pp. 91-100 (2014). doi: 10.3233/IFS-120717

- [5] Zhao C., Zhang Y., Zhang X., He J., “Recognition of driver’s fatigue expression using Local Multiresolution Derivative Pattern,” Journal of Intelligent & Fuzzy Systems, Vol. 30, No. 1, pp. 547-560 (2015). doi: 10.3233/ifs-151779

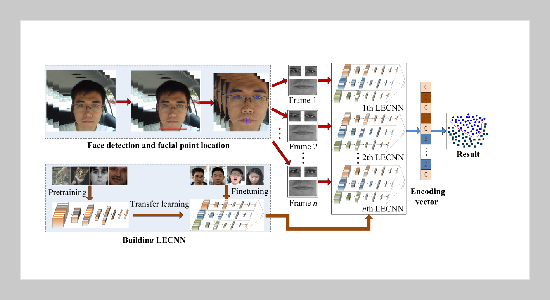

- [6] Wang C., Yan T., Jia H., “Spatial-Temporal Feature Representation Learning for Facial Fatigue Detection,” International Journal of Pattern Recognition & Artificial Intelligence, Vol. 32, No. 12, pp. 1856018 (2018). doi: 10.1142/S0218001418560189

- [7] Jabbar R., Al-Khalifa K., Kharbeche M., Alhajyaseen W., Jafari M., Shan J., “Real-time Driver Drowsiness Detection for Android Application Using Deep Neural Networks Techniques,” Procedia Computer Science, Vol. 130, pp. 400-407 (2018). doi: 10.1016/j.procs.2018.04.060

- [8] Viola P., Jones MJ., “Robust real-time face detection,” International Journal of Computer Vision, Vol. 57, No. 2, pp. 137-154 (2004). doi: 10.1023/B:VISI.0000013087.49260.fb

- [9] Asthana A., Zafeiriou S., Cheng S., Pantic M., “Incremental face alignment in the wild,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, U.S.A., June 24-27, pp. 1859-1866 (2014).

- [10] LeCun Y., Bottou L., Bengio Y., Haffner P., “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, Vol. 86, No. 11, pp. 2278-2324 (1998). doi: 10.1109/5.726791

- [11] Lucey P., Cohn JF., Kanade T., Saragih J., Ambadar Z., Matthews I., “The extended Cohn-Kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression,” Proceedings of 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, U.S.A., June 13-18, pp. 94-101 (2010).

- [12] Pantic M., Valstar M., Rademaker R., Maat L., “Web-based database for facial expression analysis,” Proceedings of 2005 IEEE International Conference on Multimedia and Expo, Amsterdam, Netherlands, July 06-06 (2005).

- [13] Bänziger T., Scherer KR., “Introducing the Geneva Multimodal Emotion Portrayal (GEMEP) corpus,” Blueprint for Affective Computing: A Sourcebook, Oxford University Press, Oxford, pp. 271-294 (2010).

- [14] Goodfellow IJ., Erhan D., Carrier PL., Courville A., Mirza M., Hamner B., Cukierski W., Tang Y., Thaler D., Lee D-H., “Challenges in representation learning: A report on three machine learning contests,” Proceedings of 2013 International Conference on Neural Information Processing, Daegu, Korea, May 26-30, pp. 117-124 (2013).

- [15] Lyons MJ., Budynek J., Akamatsu S., “Automatic classification of single facial images,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 21, No. 12, pp. 1357-1362 (1999). doi: 10.1109/34.817413

- [16] Krizhevsky A., “Learning Multiple Layers of Features from Tiny Images,” Dissertation, University of Toronto, Toronto, ON, Canada (2009).

- [17] Zhao L., Wang Z., Zhang G., “Facial expression recognition from video sequences based on spatial-temporal motion local binary pattern and Gabor multiorientation fusion histogram,” Mathematical Problems in Engineering, Vol. 2017, pp. 1-12 (2017). doi: 10.1155/2017/7206041

- [18] Zhao L., Wang Z., Wang X., Qi Y., Liu Q., Zhang G., “Human fatigue expression recognition through image-based dynamic multi-information and bimodal deep learning,” Journal of Electronic Imaging, Vol. 25, No. 5, pp. 053024. doi: 10.1117/1.JEI.25.5.053024