- [1] P. Wang, B. Bayram, and E. Sertel, (2022) “A comprehensive review on deep learning based remote sensing image super-resolution methods” Earth-Science Reviews 232: 104110.

- [2] S. Yin, (2023) “Object Detection Based on Deep Learning: A Brief Review” IJLAI Transactions on Science and Engineering 1(02): 1–6.

- [3] Y. Ye, T. Tang, B. Zhu, C. Yang, B. Li, and S. Hao, (2022) “A multiscale framework with unsupervised learning for remote sensing image registration” IEEE Transactions on Geoscience and Remote Sensing 60: 1–15.

- [4] R. Girshick, J. Donahue, T. Darrell, and J. Malik, (2015) “Region-based convolutional networks for accurate object detection and segmentation” IEEE transactions on pattern analysis and machine intelligence 38(1): 142–158.

- [5] R. Girshick. “Fast r-cnn”. In: Proceedings of the IEEE international conference on computer vision. 2015, 1440–1448.

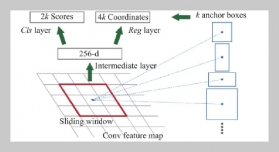

- [6] S. Ren, K. He, R. Girshick, and J. Sun, (2016) “Faster R-CNN: Towards real-time object detection with region proposal networks” IEEE transactions on pattern analysis and machine intelligence 39(6): 1137–1149.

- [7] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu, and A. C. Berg. “Ssd: Single shot multibox detector”. In: Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14. Springer. 2016, 21–37.

- [8] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi. “You only look once: Unified, real-time object detection”. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2016, 779–788.

- [9] B. Liu, J. Luo, and H. Huang, (2020) “Toward automatic quantification of knee osteoarthritis severity using improved Faster R-CNN” International journal of computer assisted radiology and surgery 15: 457–466.

- [10] L. Shen, H. Tao, Y. Ni, Y. Wang, and V. Stojanovic, (2023) “Improved YOLOv3 model with feature map cropping for multi-scale road object detection” Measurement Science and Technology 34(4): 045406.

- [11] J. Jang, D. Van, H. Jang, D. H. Baik, S. Duk Yoo, J. Park, S. Mhin, J. Mazumder, and S. H. Lee, (2020) “Residual neural network-based fully convolutional network for microstructure segmentation” Science and Technology of Welding and Joining 25(4): 282–289.

- [12] Y. Liu, B. Fan, L. Wang, J. Bai, S. Xiang, and C. Pan, (2018) “Semantic labeling in very high resolution images via a self-cascaded convolutional neural network” ISPRS journal of photogrammetry and remote sensing 145: 78–95.

- [13] J. Ding, J. Zhang, Z. Zhan, X. Tang, and X. Wang, (2022) “A precision efficient method for collapsed building detection in post-earthquake UAV images based on the improved NMS algorithm and Faster R-CNN” Remote Sensing 14(3): 663.

- [14] T. Bai, Y. Pang, J. Wang, K. Han, J. Luo, H. Wang, J. Lin, J. Wu, and H. Zhang, (2020) “An optimized faster R-CNN method based on DRNet and RoI align for building detection in remote sensing images” Remote Sensing 12(5): 762.

- [15] G. Hua, M. Liao, S. Tian, Y. Zhang, and W. Zou, (2023) “Multiple relational learning network for joint referring expression comprehension and segmentation” IEEE Transactions on Multimedia.

- [16] W. Gaihua, L. Jinheng, C. Lei, D. Yingying, and Z. Tianlun, (2022) “Instance segmentation convolutional neural network based on multi-scale attention mechanism” Plos one 17(1): e0263134.

- [17] F. N. Iandola, S. Han, M. W. Moskewicz, K. Ashraf, W. J. Dally, and K. Keutzer, (2016) “SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size” arXiv preprint arXiv:1602.07360.

- [18] D. Sinha and M. El-Sharkawy. “Thin mobilenet: An enhanced mobilenet architecture”. In: 2019 IEEE 10th annual ubiquitous computing, electronics & mobile communication conference (UEMCON). IEEE. 2019, 0280–0285.

- [19] M. Tan, R. Pang, and Q. V. Le. “Efficientdet: Scalable and efficient object detection”. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2020, 10781–10790.

- [20] K. Han, Y. Wang, Q. Tian, J. Guo, C. Xu, and C. Xu. “Ghostnet: More features from cheap operations”. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2020, 1580–1589.

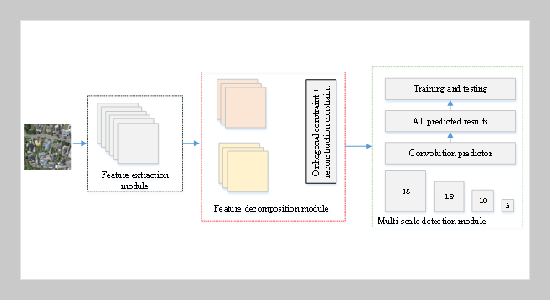

- [21] S. Yin, L. Wang, Q. Wang, M. Ivanović, and J. Yang, (2023) “M2F2-RCNN: Multi-functional faster RCNN based on multi-scale feature fusion for region search in remote sensing images” Computer Science and Information Systems (00): 54–54.

- [22] M. Luo, S. Ji, and S. Wei, (2023) “A diverse large-scale building dataset and a novel plug-and-play domain generalization method for building extraction” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing.

- [23] H. Cao, Y. Wang, J. Chen, D. Jiang, X. Zhang, Q. Tian, and M. Wang. “Swin-unet: Unet-like pure transformer for medical image segmentation”. In: European conference on computer vision. Springer. 2022, 205–218.

- [24] G. Wu, X. Shao, Z. Guo, Q. Chen, W. Yuan, X. Shi, Y. Xu, and R. Shibasaki, (2018) “Automatic building segmentation of aerial imagery using multi-constraint fully convolutional networks” Remote Sensing 10(3): 407.

- [25] Z. Zhou, M. M. R. Siddiquee, N. Tajbakhsh, and J. Liang, (2019) “Unet++: Redesigning skip connections to exploit multiscale features in image segmentation” IEEE transactions on medical imaging 39(6): 1856–1867.

- [26] L. Teng, Y. Qiao, M. Shafiq, G. Srivastava, A. R. Javed, T. R. Gadekallu, and S. Yin, (2023) “FLPK-BiSeNet: Federated learning based on priori knowledge and bilateral segmentation network for image edge extraction” IEEE Transactions on Network and Service Management.