- [1] V. B. Hans, (2016) “Water management in agriculture: Issues and strategies in India” SSRN Electronic Journal.

- [2] X. Kan, T. C. Thayer, S. Carpin, and K. Karydis, (2021) “Task Planning on Stochastic Aisle Graphs for Precision Agriculture” IEEE Robotics and Automation Letters 6(2): 3287–3294. DOI: 10.1109/LRA.2021.3062337.

- [3] India’s Rural Farmers Struggle to Read and Write. Here’s How ‘AgriApps’ Might Change That. - GOOD. https://www.good.is/articles/agricultural-apps-bridgeliteracy-gaps-in-india. accessed: Jul. 17, 2022.

- [4] We still can’t agree how to regulate self-driving cars - The Verge. https://www.theverge.com/2020/2/11/21133389/house-energy-commerce-self-drivingcar-hearing-bill-2020. accessed: Jul. 23, 2022.

- [5] Autonomous vehicles: How 7 countries are handling the regulatory landscape | TechRepublic. https://www.techrepublic.com/article/autonomous-vehicles-how-7-countries-are-handling-the-regulatory-landscape/. accessed: Jul. 23, 2022.

- [6] F. Zhang, W. Zhang, X. Luo, Z. Zhang, Y. Lu, and B. Wang, (2022) “Developing an IoT-Enabled Cloud Management Platform for Agricultural Machinery Equipped with Automatic Navigation Systems” Agriculture (Switzerland) 12(2). DOI: 10.3390/agriculture12020310.

- [7] T. S. Hong, D. Nakhaeinia, and B. Karasfi, (2012) “Application of fuzzy logic in mobile robot navigation” Fuzzy Logic-Controls, Concepts, Theories and Applications: 21–36.

- [8] I. Susnea, A. Filipescu, G. Vasiliu, G. Coman, and A. Radaschin, (2010) “The bubble rebound obstacle avoidance algorithm for mobile robots” pp. 540–545. DOI: 10.1109/ICCA.2010.5524302.

- [9] T. S. Abhishek, D. Schilberg, and A. S. A. Doss, (2021) “Obstacle avoidance algorithms: A review” IOP Conference Series: Materials Science and Engineering 1012(1): 012052.

- [10] A. Ohya, A. Kosaka, and A. Kak, (1998) “Vision-based navigation by a mobile robot with obstacle avoidance using single-camera vision and ultrasonic sensing” IEEE Transactions on Robotics and Automation 14(6): 969–978. DOI: 10.1109/70.736780.

- [11] S. A. Hiremath, G. W. van der Heijden, F. K. van Evert, A. Stein, and C. J. Ter Braak, (2014) “Laser range finder model for autonomous navigation of a robot in a maize field using a particle filter” Computers and Electronics in Agriculture 100: 41–50. DOI: 10.1016/j.compag.2013.10.005.

- [12] J.-K. Yoo and J.-H. Kim, (2015) “Gaze Control-Based Navigation Architecture with a Situation-Specific Preference Approach for Humanoid Robots” IEEE/ASME Transactions on Mechatronics 20(5): 2425–2436. DOI: 10.1109/TMECH.2014.2382633.

- [13] S. Sukkarieh, E. M. Nebot, and H. F. Durrant-Whyte, (1999) “A high integrity IMU/GPS navigation loop for autonomous land vehicle applications” IEEE Transactions on Robotics and Automation 15(3): 572–578. DOI: 10.1109/70.768189.

- [14] M. Gilmartin, (2005) “Introduction to Autonomous Mobile Robots, by Roland Siegwart and Illah R. Nourbakhsh, MIT Press, 2004, xiii + 321 pp., ISBN 0-262-19502-X (Hardback, £27.95)” Robotica 23(2): 271–272.

- [15] X. Gao, J. Li, L. Fan, Q. Zhou, K. Yin, J. Wang, C. Song, L. Huang, and Z. Wang, (2018) “Review of wheeled mobile robots’ navigation problems and application prospects in agriculture” IEEE Access 6: 49248–49268. DOI: 10.1109/ACCESS.2018.2868848.

- [16] K. Zhu and T. Zhang, (2021) “Deep reinforcement learning based mobile robot navigation: A review” Tsinghua Science and Technology 26(5): 674–691. DOI: 10.26599/TST.2021.9010012.

- [17] Bosch’s Giant Robot Can Punch Weeds to Death - IEEE Spectrum. https://spectrum.ieee.org/bosch-deepfield-robotics-weed-control. accessed: Jan. 26, 2022.

- [18] A. Roshanianfard, N. Noguchi, H. Okamoto, and K. Ishii, (2020) “A review of autonomous agricultural vehicles (The experience of Hokkaido University)” Journal of Terramechanics 91: 155–183. DOI: 10.1016/j.jterra.2020.06.006.

- [19] W. Zhao, X. Wang, B. Qi, and T. Runge, (2020) “Ground-level Mapping and Navigating for Agriculture based on IoT and Computer Vision” IEEE Access. DOI: 10.1109/ACCESS.2020.3043662.

- [20] W. Yuan, Z. Li, and C.-Y. Su, (2021) “Multisensor-Based Navigation and Control of a Mobile Service Robot” IEEE Transactions on Systems, Man, and Cybernetics: Systems 51(4): 2624–2634. DOI: 10.1109/TSMC.2019.2916932.

- [21] F. Rovira-Mas, V. Saiz-Rubio, and A. Cuenca-Cuenca, (2021) “Augmented Perception for Agricultural Robots Navigation” IEEE Sensors Journal 21(10): 11712–11727. DOI: 10.1109/JSEN.2020.3016081.

- [22] N. Gupta, M. Khosravy, S. Gupta, N. Dey, and R. G. Crespo, (2022) “Lightweight Artificial Intelligence Technology for Health Diagnosis of Agriculture Vehicles: Parallel Evolving Artificial Neural Networks by Genetic Algorithm” International Journal of Parallel Programming 50(1). DOI: 10.1007/s10766-020-00671-1.

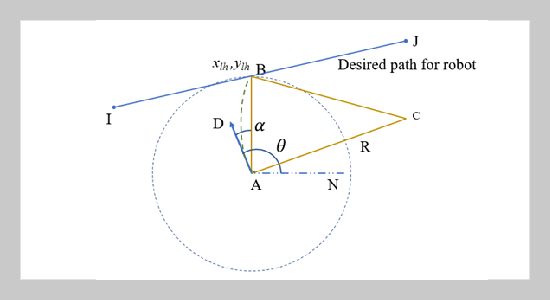

- [23] E. Horvath, C. Hajdu, and P. Koros, (2019) “Novel Pure-Pursuit Trajectory Following Approaches and their Practical Applications” pp. 597–602. DOI: 10.1109/CogInfoCom47531.2019.9089927.

- [24] X. Wang, G. Tan, Y. Dai, F. Lu, and J. Zhao, (2020) “An Optimal Guidance Strategy for Moving-Target Interception by a Multirotor Unmanned Aerial Vehicle Swarm” IEEE Access 8: 121650–121664. DOI: 10.1109/ACCESS.2020.3006479.

- [25] J. Lopez, P. Sanchez-Vilarino, R. Sanz, and E. Paz, (2021) “Efficient Local Navigation Approach for Autonomous Driving Vehicles” IEEE Access 9: 79776–79792. DOI: 10.1109/ACCESS.2021.3084807.

- [26] T. Bouwmans, (2014) “Traditional and recent approaches in background modeling for foreground detection: An overview” Computer Science Review 11–12: 31–66. DOI: 10.1016/j.cosrev.2014.04.001.

- [27] J. S. Kulchandani and K. J. Dangarwala, (2015) “Moving object detection: Review of recent research trends”. DOI: 10.1109/PERVASIVE.2015.7087138.

- [28] G. Qi, H. Wang, M. Haner, C. Weng, S. Chen, and Z. Zhu, (2019) “Convolutional neural network based detection and judgement of environmental obstacle in vehicle operation” CAAI Transactions on Intelligence Technology 4(2): 80–91. DOI: 10.1049/trit.2018.1045.

- [29] J. Borenstein and Y. Koren, (1989) “Real-Time Obstacle Avoidance for Fast Mobile Robots” IEEE Transactions on Systems, Man and Cybernetics 19(5): 1179–1187. DOI: 10.1109/21.44033.

- [30] J. Borenstein and Y. Koren, (1991) “The vector field histogram-fast obstacle avoidance for mobile robots” IEEE Transactions on Robotics and Automation 7(3): 278–288.

- [31] W. Zhang, H. Cheng, L. Hao, X. Li, M. Liu, and X. Gao, (2021) “An obstacle avoidance algorithm for robot manipulators based on decision-making force” Robotics and Computer-Integrated Manufacturing 71. DOI: 10.1016/j.rcim.2020.102114.

- [32] Z. Chong, B. Qin, T. Bandyopadhyay, M. Ang, E. Frazzoli, and D. Rus, (2013) “Synthetic 2D LIDAR for precise vehicle localization in 3D urban environment” pp. 1554–1559. DOI: 10.1109/ICRA.2013.6630777.

- [33] Y. Li and J. Ibanez-Guzman, (2020) “Lidar for Autonomous Driving: The Principles, Challenges, and Trends for Automotive Lidar and Perception Systems” IEEE Signal Processing Magazine 37(4): 50–61. DOI: 10.1109/MSP.2020.2973615.

- [34] U. Weiss and P. Biber, (2011) “Plant detection and mapping for agricultural robots using a 3D LIDAR sensor” Robotics and Autonomous Systems 59(5): 265–273. DOI: 10.1016/j.robot.2011.02.011.

- [35] O. C. Barawid Jr., A. Mizushima, K. Ishii, and N. Noguchi, (2007) “Development of an Autonomous Navigation System using a Two-dimensional Laser Scanner in an Orchard Application” Biosystems Engineering 96(2): 139–149. DOI: 10.1016/j.biosystemseng.2006.10.012.

- [36] V. Subramanian, T. F. Burks, and A. Arroyo, (2006) “Development of machine vision and laser radar based autonomous vehicle guidance systems for citrus grove navigation” Computers and Electronics in Agriculture 53(2): 130–143. DOI: 10.1016/j.compag.2006.06.001.

- [37] Y. Ji, S. Li, C. Peng, H. Xu, R. Cao, and M. Zhang, (2021) “Obstacle detection and recognition in farmland based on fusion point cloud data” Computers and Electronics in Agriculture 189. DOI: 10.1016/j.compag.2021.106409.

- [38] N. Li, L. Guan, Y. Gao, S. Du, M. Wu, X. Guang, and X. Cong, (2020) “Indoor and outdoor low-cost seamless integrated navigation system based on the integration of INS/GNSS/LIDAR system” Remote Sensing 12(19): 1–21. DOI: 10.3390/rs12193271.

- [39] M. Samuel, M. Hussein, and M. B. Mohamad, (2016) “A review of some pure-pursuit based path tracking techniques for control of autonomous vehicle” International Journal of Computer Applications 135(1): 35–38.

- [40] J. Lowenberg-DeBoer, K. Behrendt, M.-H. Ehlers, C. Dillon, A. Gabriel, I. Y. Huang, I. Kumwenda, T. Mark, A. Meyer-Aurich, G. Milics, K. O. Olagunju, S. M. Pedersen, J. Shockley, and D. Rose, (2022) “Lessons to be learned in adoption of autonomous equipment for field crops” Applied Economic Perspectives and Policy 44(2): 848–864. DOI: 10.1002/aepp.13177.

- [41] O. Bawden, D. Ball, J. Kulk, T. Perez, and R. Russell, (2014) “A lightweight, modular robotic vehicle for the sustainable intensification of agriculture”, 2–4 December 2014.