REFERENCES

- [1] Vinyals, O., A. Toshev, S. Bengio, and D. Erhan (2014) Show and tell: a neural image caption generator, Proc. of 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 3156–3164.

- [2] Sak, H., A. Senior, and F. Beaufays (2014) Long short-term memory recurrent neural network architectures for large scale acoustic modeling, Proc. of Fifteenth Annual Conference of the International Speech Communication Association, Singapore.

- [3] Chen, X., and C. L. Zitnick (2015) Mind’s eye: a recurrent visual representation for image caption generation, Proc. of 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA. doi: 10.1109/CVPR.2015.7298856

- [4] Soh, M. (2016) Learning CNN-LSTM Architectures for Image Caption Generation, https://cs224d.stanford.edu/reports/msoh.pdf.

- [5] Bernardi, R., R. Cakici, D. Elliott, A. Erdem, E. Erdem, N. Ikizler-Cinbis, F. Keller, A. Muscat, and B. Plank (2016) Automatic Description Generation from Images: a Survey of Models, Datasets, and Evaluation Measures, https://www.jair.org/media/4900/live-4900-9139-jair.pdf. doi: 10.1613/jair.4900

- [6] Xi, S. M., and Y. I. Cho (2014) Image caption automatic generation method based on weighted feature, Proc. of 2013 13th International Conference on Control, Automation and Systems (ICCAS 2013), Gwangju, South Korea. doi: 10.1109/ICCAS.2013.6703998

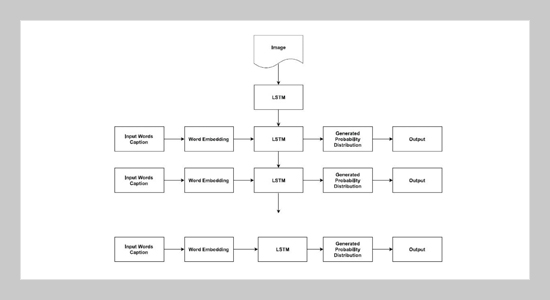

- [7] Wu, J., and H. Hu (2017) Cascade recurrent neural network for image caption generation, Electronics Letters 53(25), 1642–1643. doi: 10.1049/el.2017.3159

- [8] Kim, D., D. Yoo, B. Sim, and I. S. Kweon (2016) Sentence learning on deep convolutional networks for image caption generation, Proc. of 2016 13th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), 246–247. doi: 10.1109/URAI.2016.7625747

- [9] Chowdhary, C. L., and D. P. Acharjya (2017) Clustering algorithm in possibilistic exponential fuzzy c-mean segmenting medical images, Journal of Biomimetics, Biomaterials and Biomedical Engineering 30, 12–23. doi: 10.4028/www.scientific.net/JBBBE.30.12

- [10] Chen, J. L., Y. L. Wang, Y. J. Wu, and C. Q. Cai (2017) An ensemble of convolutional neural networks for image classification based on LSTM, Proc. of 2017 International Conference on Green Informatics (ICGI), Fuzhou, China, 217–222. doi: 10.1109/ICGI.2017.36

- [11] Sundermeyer, M., R. Schluter, and H. Ney (2012) LSTM Neural Networks for Language Modeling, http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.248.4448&rep=rep1&type=pdf.

- [12] Feng, Y., and M. Lapata (2013) Automatic caption generation for news images, IEEE Transactions on Pattern Analysis and Machine Intelligence 35(4), 797–812. doi: 10.1109/TPAMI.2012.118

- [13] Chowdhary, C. L., and D. P. Acharjya (2018) Segmentation of mammograms using a novel intuitionistic possibilistic fuzzy c-mean clustering algorithm, Nature Inspired Computing, 75–82.

- [14] Li, J., and Y. Shen (2017) Image describing based on bidirectional LSTM and improved sequence sampling, Proc. of 2017 IEEE 2nd International Conference on Big Data Analysis (ICBDA), 735–739. doi: 10.1109/ICBDA.2017.8078733

- [15] Poghosyan, A., and H. Sarukhanyan (2017) Short-term memory with read-only unit in neural image caption generator, Proc. of 2017 Computer Science and Information Technologies (CSIT), Yerevan, Armenia. doi: 10.1109/CSITechnol.2017.8312163

- [16] Shah, P., V. Bakrola, and S. Pati (2017) Image captioning using deep neural architectures, Proc. of 2017 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India. doi: 10.1109/ICIIECS.2017.8276124

- [17] Sundermeyer, M., H. Ney, and R. Schluter (2015) From feedforward to recurrent LSTM neural networks for language modeling, IEEE/ACM Transactions on Audio, Speech, and Language Processing 23(3), 517–529. doi: 10.1109/TASLP.2015.2400218

- [18] Chowdhary, C. L., and D. P. Acharjya (2016) A hybrid scheme for breast cancer detection using intuitionistic fuzzy rough set technique, Biometrics: Concepts, Methodologies, Tools, and Applications, 1195–1219.

- [19] Vijay, K., and D. Ramya (2015) Generation of caption selection for news images using stemming algorithm, Proc. of 2015 International Conference on Computation of Power, Energy, Information and Communication (ICCPEIC), Chennai, India, 536–540. doi: 10.1109/ICCPEIC.2015.7259513

- [20] Wang, M., L. Song, X. Yang, and C. Luo (2016) A parallel-fusion RNN-LSTM architecture for image caption generation, Proc. of 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA. doi: 10.1109/ICIP.2016.7533201